I love new technology. I live for it. I spend inordinate amounts of money on it, much to my son's delight, my daughter's bemusement, and my wife's chagrin. If there is an electronicomechanical way of doing something that I could have done manually, well then, I'm on it. However, when it comes to Voice Recognition, I do an about-face from my progressive side and become a pure Luddite. But follow my reasoning, and you might agree.

First off, Wikipedia informs us that "Voice Recognition" is the task of recognizing people from their voices, and this might be done electronically for identification purposes, among other tasks. What we are really interested in is Speech Recognition, which the Wiki says "is the process of converting a speech signal to a set of words, by means of an algorithm implemented as a computer program." Now, this has been one of several Holy Grails for computer science since the dawn of time.

SR should be great for radiology, given that our main product is the typed report that is based on our talking into a microphone all day long. The old-timey approach was to have a human listen to all that blather and type a report into a typewriter (remember those?), or into some computer or another. Now, if I can get said computer to actually understand what I'm saying, we eliminate the middleman, or woman, i.e., the transcriptionist. But is the technology there yet? In 1968, the movie 2001: A Space Odyssey told us that we should have had conversant, artificially-intelligent HAL 9000s, as well as commercial space flight, a Hilton hotel on a space station, a moon base, and exploration of Jupiter as of 5 years ago. Oh well. We don't even have Pan Am anymore. But, we do have VR, I mean SR, right? Here's a citation from a Journal of Digital Imaging article out of Mass General by Mehta, Dreyer, et al.:

Voice recognition--an emerging necessity within radiology: experiences of the Massachusetts General Hospital.

Mehta A, Dreyer KJ, Schweitzer A, Couris J, Rosenthal D.

Department of Radiology, Massachusetts General Hospital, Harvard Medical School, Boston, USA.

Voice recognition represents a technology that is finally ready for prime time use. As radiology services continue to acquire a larger percentage of the shrinking health-care dollar, decreasing operating costs and improved services will become a necessity. The benefits of voice recognition implementation are significant, as are the challenges. This report will discuss the technology, experiences of major health-care institution with implementation, and potential benefits for the radiology practice.

Great article, and it was written in 1998! The italics are mine, by the way. So here we are, eight years later; why isn't everyone using VR/SR?

The problem seems to be that the technology really isn't there yet. Matrad6781 posted this on an AuntMinnie.com forum, and it tells the story in a painfully accurate manner:

My problem with our voice recognition system is that commands that used to be triggered by the thumb on a microphone are now voice commands. Often the software doesn't recognize my voice and I end up having to repeat myself several times. That never happened in the "buggy whip" days. Throughout my department you can hear radiologists saying: "Defer report...defer report...DEFER REPORT, dammit!" or "delete that sentence, DELETE that sentence, delete that SENTENCE!" Also, when I say "parentheses" or "quote" or "paragraph", my transcriptionists know what I mean. This system actually types out the words "parentheses', "quote", "unquote", etcetera. How clever is this? Is this what is meant by artificial intelligence? I have alot of "canned" reports, both normals and intro paragraphs for MRI protocols, interventional procedures, etc. At last count, I had 150 such "normals." Before voice recognition, all I had to dictate was, "Normal MRI of the left knee" and the transcriptionists called it up from their macros and sent it for my electronic signature. Now I have to remember the name I gave the normal report (ProVox calls them "Macros") and enunciate it properly (so I don't get a "Normal chest" appearing when I said "Macro Normal CT Chest Enhanced". But it happens, all too frequently. And I have to always keep an eye on the voice dictation window, the way you would a toddler to make sure it's doing what it's supposed to and not getting into trouble. By the way, that window, even when minimized takes up valuable real estate from my PACS work station (and I have four monitors!) I'm always moving the window around because it's obscuring images or the worklist. Very inconvenient. The worst is when the system thinks that I (or someone in the background) uttered a voice command that is one of the "nuclear option" commands, like "finalize report", "delete that paragraph", "cancel report." 
Then, poof, five minutes of dictation are gone, just like that, and I have to start from scratch. Are you guys telling me no one else has experienced any of these problems? Is it just our manufacturer? Lastly, the voice recognition is so bad, that everything ends up getting deferred by all the radiologists to the transcriptionists, so that they can correct the errors before sending to the task list for finalization. So we have as many transcriptionists as before. Last point: We have some great transcriptionists who catch errors that a voice recognition system would never recognize. I used to get electronic notes like "You said LEFT in body but RIGHT in impression." Or: " You gave a measurement of 3.5 mm in the body, are you sure you meant "mm" and not not "cm"?" These folks have saved my butt many times. I'm kind of glad that the voice recognition dictations still go through them as a fail safe mechanism. Also, one of our transcriptionists makes really good carrot cake! Let's see a VR system do that!

The bottom line is that these very expensive systems (think hundreds of thousands of dollars) do not have human intelligence behind them. No, I'm not saying bad things about the programmers! It's simply that there is a very rich background to our communications, and no machine has risen to the level of understanding, or perhaps I should say intuiting, what we put into it. The left/right and cm/mm problems are good examples. I suppose you could program the machine to count how many times you say "left" and how many times you say "right", but then what? Should there be an equal number? Not necessarily. So what is a poor machine to do? The human transcriptionist can easily find the discrepancies of this sort, but a machine just can't do that yet.
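To make the left/right point concrete, here is a minimal sketch of the kind of naive check one could bolt onto a report. It is purely my own illustration, not anything a vendor ships as far as I know, and the function names (`laterality_sides`, `flag_laterality_mismatch`) are hypothetical. It assumes the report has already been split into a body and an impression, and it simply flags any side named in the impression that never appears in the body:

```python
import re

def laterality_sides(text):
    """Return the set of sides ('left', 'right') mentioned in the text."""
    return set(re.findall(r"\b(left|right)\b", text.lower()))

def flag_laterality_mismatch(body, impression):
    """Return the sides named in the impression that the body never mentions."""
    return sorted(laterality_sides(impression) - laterality_sides(body))

# Hypothetical one-sided report: the body says left, the impression says right.
body = "There is a small effusion in the left knee."
impression = "Small right knee effusion."
print(flag_laterality_mismatch(body, impression))  # ['right']
```

This catches only the crudest slip. If the body reads "Left knee normal. Right knee shows an effusion." and the impression reads "Left knee effusion.", both sides appear in the body, so nothing is flagged and the swap sails through. That is the "but then what?" problem in a nutshell: the check counts words, it doesn't understand the report.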

What are the advantages of SR that make it worth the kind of trouble Matrad describes? I can think of only two: time and money. If all works well, the SR system can have a report available online the instant you sign off. That's a good thing, though it can be equalled or even surpassed by having an adequate pool of human transcriptionists online and ready to do their thing. But speed doesn't seem to be the main impetus in many places. Sadly, what SR allows is the shifting of work onto the radiologist. The transcriptionist's job is really two-fold: she (or he) commits to the screen what the rad has dictated, and then she edits out the errors that may have occurred. SR can do a passable job of typing (if one trains it to one's voice for a very long time), but it just can't do the editing. Since the radiologist is ultimately responsible for the report, some administrative types have decided that the rad should do the editing as well! Wonderful idea, if you are trying to rid yourself of transcriptionists. Unfortunately, this adds a lot of editing time to the rad's day that he or she should be spending reading studies. Another poster, Jack (Dr. Death) Kervorkian, puts it in these terms:

(S)orry guys, in my book - time is money. If I have to spend 20% of my time correcting reports and looking for content, syntax, grammatical and/or spelling errors, that is 20% which I am not productive. Furthermore, it now makes me not a radiologist, but an editor of reports. Just think, in an 8-10 hour day that amounts to an extra 1-2 hours of agonizing editing. I'd rather have a second set of eyes and ears - which know my dictation style to look over me. Can't tell you how many times a transcriptionist has saved my ass from looking stupid, with the usual 'right/left" errors, or at the 12th hours calling a CT scan an MRI scan.. Although voice recognition will catch spelling errors, it won't catch content, grammatical or syntax errors, as mentioned above.

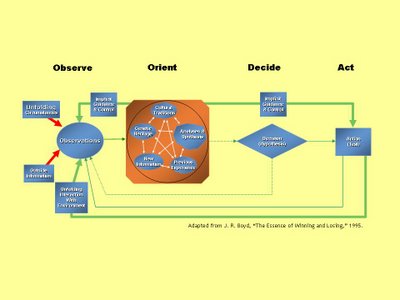

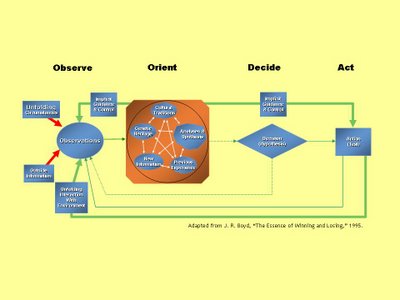

So everyone loses in this scenario, except for the bean-counters who justify the expense and the pain to the rads with the savings in personnel. Just great. Those who support SR call those of us with doubts "Luddites" and "buggy whip makers". AuntMinnie member Frank Hartwick suggests that we stick to the principles of the "OODA Loop" for decisions such as this to keep from being left behind. OK, what's an OODA loop, you ask...is it like a Fruit Loop? Not quite, and it really is germane to this discussion.

The OODA loop (Observation, Orientation, Decision, Action) is simply a way to describe a decision-making cycle, designed by a retired fighter pilot, Col. John Boyd. Basically, it describes a dynamic process that one should go through to evaluate evidence and make choices, and how those choices are dependent on your background (even your "genetic heritage") and the information you get. The decision impacts your observations, changes your orientation toward the problem, and you remake your decision.

Got all that? So, I think Frank H's OODA loop tells him that SR gives good service and should be implemented. But MY OODA loop observation is that there are a lot of complaints about SR, and that, along with my genetic heritage of worrying and previous experiences of getting burned on buying some of the latest and greatest, leads me to the decision that SR isn't ready for me as yet, and thus the action of, well, inaction. I'm digging in my heels on this idea. I have told our administration that I and my group will not accept SR unless they promise us total human backup. In other words, if they want to spend $300,000 or so on a fancy microphone for me, that's just fine. As long as they realize that we rads refuse to become editors, they can go ahead and spend the cash. But don't wave the expenditure in my face when it comes time to replace some of the worn-out scanners that really need to go.

Am I really being a "Luddite" on this? I think not. My job is to interpret my studies, not edit the reports. No one has yet convinced me that SR technology has reached the point of really being ready for prime-time. And I don't make buggy whips...to really stretch the analogy, one could say we use buggy whips in our trade. SR could then be likened to an electric cattle prod, I suppose. But to carry this to its logical end, SR will eliminate the horse, and I'll be forced to use the cattle prod on myself! No thanks, guys. I'd rather walk.

ADDENDUM....

I just had to add this from a post from William Fife on the AuntMinnie.com thread...

For those of you who love VR here is something one of our physicians did one day on call. I will note that this physician had been using VR exclusively for MONTHS (a few words have been deleted (deleted) to protect the guilty). This is not a joke, this is an actual VR transcription of that the physician was saying (in bold).

Indicating this using (deleted). (I am dictating this using (deleted).)

Mr. date of 01/02 help recent films time.(Yesterday I went to help read a few films on my own time.)

A family cells. In some (deleted). there for over and out or.( I found myself stuck in some (deleted) error for over an hour.)

The year cannot: 5 hours report was (The ER kept calling to find out where the report was.)

This did not seem to encourage may help my colleagues and the films. (This did nothing to encourage me to help out my colleagues and read films.)

Today came in early to try again had.(Today I came in early to try and get ahead.)

Instead I am now line. Out of on the nodule was noted least 15 times. (Instead I am now behind. I had to run the audiowizard 15 times.)

I had to call the past / (deleted) to support. (I had to call the PAC/(deleted) support staff)

12 joules was helpful and white, there was nothing ET tube from home. He has new trials were to things year defects at myself. (While Joe was helpful and polite, there was nothing he could do from home. He asked me to try all sorts of things here to fix it myself.)

Spent there are might time to ingest fat rather than reading films. The CT scanner post I am not expected fixed fat.Lysis any different. (I spent an hour of my time doing just that instead of reading films. When the CT scanner goes down I am not expected to try and fix it myself. Why is theis any different?)

The patient or best served 1 international unit films not tried fixed (deleted) problems were transcribed unknown reports for distended noxious females. (Patients are best served when I read films, not try to fix (deleted) problems or transcribe my own reports or send obnoxious e-mails.)

Kilohertz it is line, we need better on slight support. (When it works, it is fine. When it doesn't work, we need better on-site support.)

ADDENDUM #2: This was just posted to AuntMinnie.com by "breastguy", hopefully a mammographer, and I think it really seals the deal against SR:

I heard of one rad at a children's hospital in PA who was trying to get a lawsuit together against Dictaphone for their false claims about the product. Every so often our chief circulates examples of gibberish that was sent out and cautions us to look at what we are siging - but it still happens -- one major problem is there is no "undo" button or delay- once you hit sign report it is gone and as it disappears you see "clitoral history" fly by and there is no delay to catch it. The quality of the reports clearly suffers. And you cant say "known Carcinoma" it comes out "No carcinoma" -- I curse the Powerscribe apps people that spent days with us yet never called attention to possible pitfalls like this - they never pointed out the weaknesses- we had to stumble over them ourselves. Sure you can save the reports and review them later, or send every one to the editor (be sure to keep some transcriptionsits to edit) but I can tell you, in our large group , with editors, crap still gets sent out and ref docs gleefully call you up to say "Did you mean Clinical History instead of Clitoral History?" Certainly makes us look foolish. As far as it not understanding the instructions to call up a macro- it was driing me crazy! I solved that - instead of my saying "Mammo Heterogenous Normal Compared" I renamed it "Hubert Nancy Carol" Dense mammo is " Denise Nancy Carol" etc and so on for the many screening mammo macros I created- here it does save time.

I really think that's enough. Frankly, after looking into this, I'm inclined to say "NO SR" altogether. The machines seem to compound the possible errors, making the transcriptionist/editor's job that much harder. This is a really, really, REALLY bad idea.