In reading last year's RSNA report, I was struck by just how little has changed.

Here I am this year, 2017, and here's how I looked at RSNA 2016:

A little grayer, perhaps a pound or two more. But otherwise same ol' Dalai. And same ol' RSNA. I even manned the RAD-AID booth again:

Yes, I tied the bow-tie all by myself.

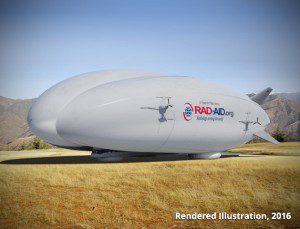

This is a model, housed at the Bayer booth, of the airship RAD-AID hopes to use to bring imaging to underserved areas; I think the official rendering is much more impressive, and maybe even a little, well, buxom:

I'm still lobbying for a seat on the first flight. Did I say buxom? I meant handsome!

I did attend the requisite PET/CT and SPECT/CT lectures. Once again, I was impressed by the fact that I have a better SPECT/CT scanner (Siemens Symbia Intevo) than some of the BIG NAMES in Nuclear Medicine who are out there giving the lectures. Of course, with their knowledge and expertise, they can probably get as much information out of their Hawkeye SPECT/pseudoCT scanner as I can from my advanced instrument, but they aren't available down in the boonies where I practice...

Yes, yes. I know. Get to the point, Dalai!

What about AI!?

You probably know by now that AI dominated RSNA, even more so than last year. Here is a photo of the average attendee trying to get into one of the packed AI lectures:

If you count residency, I've been in this business since 1985, over 32 years. I've seen the rise of MRI, multi-slice CT, PET/CT, PET/MRI, SPECT/CT, PACS, EMR, Digital Everything, endoscopy, DRA(eck) 2005, "value-based" imaging, Imaging 3.x (a.k.a. "We're Doctors Too!!"), Image Gentlemanly, Meaningless Use, and other revolutions. I've seen the fall of film and the decline of barium. It's been quite a ride. But I've never, ever, EVER seen the level of interest, well, more accurately, fear, trepidation, anxiety, paranoia, and sheer terror that AI has inspired. The draw for AI lectures seemed not unlike the morbid compulsion to stop to look at a really bad car wreck. I don't think a live mud-wrestling match between Trump and Hillary would draw even half the audience.

I found the whole thing quite amusing, really. There were crowds at any talk with a title or description or anything at all that suggested AI; if this talk had been at RSNA, I'm sure it would have attracted hundreds. I did find a rough dichotomy in the AI talks. There were those which talked about the mechanics of AI and Machine Learning, covering all sorts of things like Convoluted Neural Networks; you could literally hear the crystalline tinkling of the eyes of the crowd glazing over as the talks progressed further and further into the very complex weeds. And then there were the sessions more applicable to the riff-raff such as myself, who just want to know where we are with AI relative to radiology. Of course, the picture wouldn't be complete without a chat with a couple of the vendors who are, ummmm, deeply embedded in this space.

If you are the type to skip to the last page of the book, I'll save you the trouble. Here are the punch-lines of this entire article: AI is still not taking over. AI will be a tool to assist radiologists, not replace them. Radiologists who embrace and use AI will excel over those who don't. And finally...radiologists should help develop (and thereby control) AI's for our use. You can now go back to sleep.

I'm not going to try to recapitulate the technical talks about Artificial Intelligence (some are suggesting we call it Augmented Intelligence instead, but that evokes thoughts of another kind of silicon/silicone) and Machine Learning. There are about a zillion resources out there that will do a far better job than I ever could on these pages. Try THIS article from Radiographics as a starting point. The more practical talks (for us out here in the boonies) were a little more reassuring. There were certain trends noted. First, when it comes to AI and surrounding hype, we appear to be at the "Peak of Inflated Expectations" as per the graph below, which you've probably seen before:

And of course you've all seen these by now...

There are those, virtually ALL non-radiologists, and most from the world of AI, who are preaching the imminent demise of Radiology (if not Humanity), which is to me the most blatant example of "Inflated Expectations". This list of meanies is topped by Geoffrey Hinton from Google:

(I far prefer the views of Dr. Eliot Siegel, pictured to the right, who is NOT a meanie, and is much more optimistic about our future, and considerably more believable as he has spent quite a few years researching AI in radiology.)

Other nay-sayers include Andrew Ng, currently out of Stanford, recently of Baidu AI and also with Google connections. He has stated that AI would take over Radiology:

...but his Stanford group only recently published a rather flawed paper on a non-peer-reviewed site, claiming that their AI could outdo humans in diagnosing pneumonia on chest radiographs. And let us not forget Ezekiel Emanuel, M.D., non-radiologist physician brother of Hizzoner Rahm, who pushes Single Payer and seems to hate radiology in particular, stating in the New England Journal of (Esoteric) Medicine that radiologists will be replaced by computers within 5 years. Bah Humbug!

Typical of doomsaying articles is this recent piece in The Economist, which wildly extrapolates from very limited data, suggesting that an AI that can operate at the level of a human radiologist absolutely, positively will bring about amazing things which will displace radiologists, and naturally stipulates that all this is imminent. Of course, the author neglects the minor problem that no such machine exists.

Notice a trend? Those who are pushing this meme are not radiologists, and I submit they do not grasp what we do beyond "lookin' at the purty pitchurs".

But back to RSNA. The radiologist-centric take-home message was best voiced by Dr. Keith Dreyer in a very well-crafted talk:

- Radiologists and AI will be far better together than either one alone

- Our biggest challenge has been the lack of an AI-ecosystem

- Limiting AI: creation, validation, approval, integration, surveillance, adoption

- We see an AI future that is very bright for radiology and radiologists

- ACR is working with radiologists, industry, and government to create the future

Dr. Dreyer did state rather explicitly that the ACR would be our prime resource in this realm, guiding AI standards that will maintain its functionality as a tool to help us improve patient care, and I'm quite willing to accept their guidance. Dreyer notes that while machines are growing intelligent more quickly than we humans can manage, we are still better off working together (my comments on that later) and suggests this as our combined "evolution":

Here are the potential feedback loops for the human-AI hybrid:

Keith ended the talk with what is perhaps the most profound slide to have ever been shown at RSNA:

Absolutely indisputable.

A few vendors were more into the hype than the speakers:

The only even mildly threatening booth was from Deep Radiology, which consisted of a few benches and a monitor showing a continuous loop of a Deep Radiology stooge scientist droning on about how their system, which no one has ever seen, outdoes human rads. I took a huge chance in shooting this image, as they had a "No Photographs" policy. Like there was something to photograph.

But let's turn now to some of the vendors that at least appear to be delivering, rather than hyping.

I'll start, of course, with my friends at WatsonHealthIBMergeAMICAS. I guess my venerable AMICAS PACS is now Watson PACS, and when I need it, I don't even have to ask it to come here. (OOPS, wrong Watson!) I was able to visit with our new salesperson, and one of the apps people I've known from the beginning of my relationship with AMICAS. My time was very limited, as I had a roundtable to attend shortly after my appointment (more on that below) and I didn't get to see everything I would have liked, such as the plans for Version 8.x, nor did I see all the Watson AI programs. I did get to preview some of the more imminent (don't ask me when) add-ons to PACS. These include more robust analytics that should replace the old AMICAS Watch, utilizing IBM COGNOS business software. Marktation is coming, which will speed the process of measuring a finding and documenting it in the report.

"Patient Synopsis," another work-in-progress, is rather like a news-aggregator for radiology. It will glean context-sensitive information from the EMR and present it as a separate pane for your interpretive pleasure. I have to add here that a colleague was with me during this demonstration, and instantly noted that this could conceivably get us in trouble; what happens if Patient Synopsis doesn't pull something pertinent? My response was simple-minded as usual, but I think accurate: Without this, most of us simply don't have the time to mine through the EMR for important little tidbits. At least Patient Synopsis gives us a lot more information than we could practically obtain before.

"IBM Watson Imaging Clinical Review" is apparently available for use already. It, too, snoops into the EMR. Per IBM:

Watson Imaging Clinical Review improves the path from diagnosis to documentation, eliminating data leaks caused by incomplete or incorrect documentation. This innovative cognitive data review tool supports accurate and timely clinical and administrative decision-making by:

- Reading structured and unstructured data

- Understanding data to extract meaningful information

- Comparing clinical reports with the EMR problem list and recorded diagnosis

- Empowering users to input the correct information back into the EMR reports

Watson Imaging Clinical Review enables reconciliation of inconsistencies between clinical diagnoses and administrative records. Those inconsistencies can impact billing accuracy, quality metrics, and an organization’s bottom line.

The original release was exclusively geared toward aortic stenosis. Version 2.0, which was shown on the floor, evaluates 24 disease states including cardiomegaly, stroke, and cancer, per IBM.

Finally, there was the "Breast Care Advisor," a system that works in the background of one's mammography PACS, which pre-reads old reports, and then "looks" at the mammographic images themselves, assigning an "intricacy score". The Advisor then prioritizes and triages those studies which need attention, so the patient can undergo any necessary additional testing during the same visit.

But perhaps the most important development at IBMergeWatsonHealth is the chance to get involved in Watson's evolution. I spoke with one of the IBM VPs on this topic, noting that as a PACS customer, we had never been contacted to allow Watson to peek at our patients' anonymized data. I was promised that this would be remedied, and in fact there will be opportunities to participate in training Watson's various personae. I'll keep everyone posted on this.

I might have missed one of the more promising offerings on the exhibit floor had I not been spotted by my old friend Fred, Master Salesman for TeraRecon. Fred could quite successfully sell ice cubes to Eskimos, and was instrumental in keeping TR on my horizon whilst waiting for my hospital to understand the need for Advanced Imaging. Fred knows absolutely Everyone who is Anyone in the imaging business (although I'm not sure how I managed to become Anyone). He insisted that I look at EnvoyAI, which had embarked upon a distribution relationship with TeraRecon, and introduced me to EnvoyAI's CEO, Misha Herscu, and the other two members of the core team, Jake Taylor and Dr. Steven Rothenberg. There will be many more folks working with them when all is said and done, but I can now say I met them when it all started. Almost, anyway. Fred also fetched Jeff Sorenson, TeraRecon's CEO, with whom I spent a great deal of time, actually closing out the exhibit floor. More on that in a moment. And Fred managed to drop a name you've just heard me mention, Dr. Eliot Siegel, noting that he was on the EnvoyAI Advisory Board, as were Drs. Paul Chang and Khan Siddiqui. This is an incredible pedigree, making EnvoyAI pretty much instantly worthy of attention.

Misha, who was running on Red Bull and fumes by the time I spoke with him, describes his company as the "Amazon of AI," and that is quite accurate, although I might personally have used "iTunes Store of AI" instead. (When I suggested that AI today is where PACS was 20 years ago, he responded, "I was 6 years old back then." Nurse? Could I have the green Jello today, please?) EnvoyAI's vision statement foreshadows the rest of the story: "Our number one goal is to empower physicians by giving them access to the best algorithms available." And that's what they do. At its essence, Envoy is an aggregator. It arbitrates and vets (along with partner TeraRecon) AI algorithms and presents them to the radiologist-user. The folks pushing these AI components were literally lining up at the EnvoyAI booth to get on the roster. Right now there are about 38 algorithms in the system, with many more to come. (Signify Research says there are 14 signed distribution deals with partner companies, with three of the algorithms having FDA clearance. Some of the companies signed up to the EnvoyAI platform are 4Quant, Aidoc, icometrix, Imbio, Infervision, Lunit, Quibim, Qure.ai and VUNO.)

Here are a few of the algorithms available to date:

- Imbio offers lung density reporting for COPD analysis with chest CT scans. The Imbio CT Lung Density Analysis™ software provides reproducible CT values for pulmonary tissue, which is essential for providing quantitative support for diagnosis and follow up examinations.

- icometrix offers icobrain, an FDA-cleared brain MRI tool that is intended for automatic labeling, visualization and volumetric quantification of segmentable brain structures from a set of MR images. The software is intended to automate the current manual process of identifying, labeling and quantifying the volumes of segmentable brain structures identified on MR images.

- TeraRecon offers iNtuition Time Density Analysis for CT, which supports stroke triage workflow by producing colorized parametric maps of the brain from time-resolved, thin-slice CT scans of the head with contrast, including CBF, CBV, MTT, TTP, TOT, RT map types.

EnvoyAI utilizes these components:

- Machine: A medical imaging algorithm in a software container with well-defined inputs and outputs for easy distribution

- Developer Portal: Website for building, testing, and sharing machines

- EnvoyAI Exchange: Where an end user can buy or test a machine

- EnvoyAI Liaison: On-site software that communicates with hospitals' scanners, viewers, and either the EnvoyAI Inference Cloud or Inference Appliance

- EnvoyAI Inference Cloud: Runs machines in the cloud using de-identified data sent by the EnvoyAI Liaison

- EnvoyAI Inference Appliance: Runs machines on site in your data center

- EnvoyAI Machine API: A simple way for AI developers to implement their innovations in the EnvoyAI Inference Cloud

- EnvoyAI Liaison API: A developer interface that provides an easy way to connect the AI machines you want to the workflow tools you use

- iNtuition EnvoyAI Adapter: Allows machine results to be viewed inside of TeraRecon's iNtuition

Interestingly, the system does not use DICOM, but rather moves data around via a JSON (JavaScript Object Notation) contract for data transmission. Data can be sent to a cloud or to an in-house Inference Appliance if you don't want anything escaping your (fire)walls.
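EnvoyAI's actual JSON contract was not shown, so what follows is only a hypothetical sketch of what a "machine" with well-defined inputs and outputs might exchange on the wire. Every field name (`machine_id`, `study_uid`, `series_uids`, `destination`, `findings`, `confidence`) and both helper functions are invented for illustration; none of them are EnvoyAI's real API.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List

# HYPOTHETICAL message shapes for illustration only; EnvoyAI's real JSON
# contract is not described in the source.


@dataclass
class InferenceRequest:
    machine_id: str          # which algorithm ("machine") to run
    study_uid: str           # de-identified study identifier
    series_uids: List[str]   # de-identified series the machine declares as inputs
    destination: str         # "cloud" or "appliance" (where the machine runs)


@dataclass
class Finding:
    label: str
    confidence: float        # expected to lie in [0, 1]


@dataclass
class InferenceResult:
    machine_id: str
    study_uid: str
    findings: List[Finding] = field(default_factory=list)


def encode_request(req: InferenceRequest) -> str:
    """Serialize a request to JSON after checking the routing field."""
    if req.destination not in ("cloud", "appliance"):
        raise ValueError("destination must be 'cloud' or 'appliance'")
    return json.dumps(asdict(req), sort_keys=True)


def decode_result(payload: str) -> InferenceResult:
    """Parse a machine's JSON reply, rejecting out-of-range confidences."""
    data = json.loads(payload)
    findings = []
    for item in data.get("findings", []):
        conf = float(item["confidence"])
        if not 0.0 <= conf <= 1.0:
            raise ValueError(f"confidence out of range: {conf}")
        findings.append(Finding(label=item["label"], confidence=conf))
    return InferenceResult(data["machine_id"], data["study_uid"], findings)


if __name__ == "__main__":
    req = InferenceRequest("lung-density-demo", "anon-0001", ["anon-0001.1"], "appliance")
    print(encode_request(req))
    reply = ('{"machine_id": "lung-density-demo", "study_uid": "anon-0001", '
             '"findings": [{"label": "emphysema", "confidence": 0.82}]}')
    print(decode_result(reply))
```

Keeping requests and results as small typed records makes the contract easy to validate at both ends; the made-up `destination` field stands in for the choice between the Inference Cloud and an on-site Inference Appliance.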

This is where TeraRecon comes in. They have created NorthStar™, the "last mile" of the solution to the AI problem. In Mr. Sorenson's own words:

It’s time for a fresh approach to artificial intelligence in medicine. By presenting findings and conclusions in a format where the suggestions of many intelligence engines can be considered and accepted or rejected by the physician in real-time, it provides a reward system to the intelligence machine to improve its performance overall. Similarly, the interactions with the image data and intelligence machine findings during routine diagnostic interpretation can be captured for future training of these machines. This requires technology to ensure that the applicable source data is processed prior to interpretation, proper suggestion of applicable intelligence engines has occurred during interpretation, and the physician remains in control of what findings are propagated into their interpretation within the PACS environment.

The technologies required to achieve this future-state machine intelligence workflow are: 1) one or more app stores with intelligence machine content, 2) data transport and machine instantiation technologies to solve the last mile integration into routine clinical interpretations, 3) a viewer or embeddable viewing component allowing interaction with a plurality of machines, findings and observed user behaviors.

TR's website completes the story:

Built from the ground up on a state-of-the-art technology stack, TeraRecon's NorthStar™ viewer is the culmination of more than 20 man-years of effort. It is an AI content-enabled medical image viewer which stands to revolutionize the way physicians incorporate the galaxy of third party AI machines and embed them into their PACS workflow.

NorthStar™ allows you to benefit from the assistance of artificial intelligence, but remain in control of which results become a part of the permanent image records and your diagnostic report. Stay in control while you experiment with the future of artificial intelligence.

I had the chance to see NorthStar™ in operation, demonstrated by Mr. Sorenson himself, and like any first pass at something revolutionary, the interface is not yet quite as smooth as I would like. But the potential is very clear: NorthStar™/EnvoyAI provides a platform that lets radiologists test out various AI algorithms, utilize the results or not as they see fit, and even retrain the algorithm (for their own site or individual use, not for all users). Or, as Dr. Siegel put it, "What we’ve lacked is the communication mechanism that delivers their algorithms to a broad audience allowing clinicians to try out algorithms, while maintaining control over the patient interaction and report.” And now we have it.
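Mr. Sorenson's description boils down to a loop: machines propose findings, the physician accepts or rejects each one, only accepted findings reach the report, and every decision is kept for later training. Here is a hypothetical sketch of that loop, not a description of how NorthStar is built. The `Suggestion` record, the `review` and `acceptance_rates` functions, and the use of a per-machine acceptance rate as a crude stand-in for the "reward system" are all assumptions made for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple

# HYPOTHETICAL illustration of a physician-in-the-loop review step;
# not NorthStar's actual design.


@dataclass(frozen=True)  # frozen makes instances hashable, so they can key a dict
class Suggestion:
    machine_id: str
    finding: str


LogEntry = Tuple[str, str, bool]  # (machine_id, finding, accepted)


def review(suggestions: List[Suggestion],
           decisions: Dict[Suggestion, bool],
           training_log: List[LogEntry]) -> List[str]:
    """Return only the findings the physician accepted; log every decision.

    Anything the physician did not explicitly accept is treated as rejected,
    so nothing reaches the report without a human decision.
    """
    report = []
    for s in suggestions:
        accepted = decisions.get(s, False)
        training_log.append((s.machine_id, s.finding, accepted))
        if accepted:
            report.append(s.finding)
    return report


def acceptance_rates(training_log: List[LogEntry]) -> Dict[str, float]:
    """Fraction of each machine's suggestions that the physician accepted."""
    counts = defaultdict(lambda: [0, 0])  # machine_id -> [accepted, total]
    for machine_id, _finding, accepted in training_log:
        counts[machine_id][1] += 1
        if accepted:
            counts[machine_id][0] += 1
    return {m: acc / total for m, (acc, total) in counts.items()}


if __name__ == "__main__":
    log: List[LogEntry] = []
    a = Suggestion("nodule-finder", "5 mm RUL nodule")
    b = Suggestion("nodule-finder", "LLL nodule")
    c = Suggestion("bone-age", "bone age 12 years")
    print(review([a, b, c], {a: True, b: False, c: True}, log))
    print(acceptance_rates(log))
```

Defaulting undecided suggestions to "rejected" mirrors the point above that the physician, not the machine, decides what enters the report; the logged decisions are the kind of interaction data the quote says could feed future training.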

In the interest of thoroughness, I should add that Nuance has a somewhat similar platform for their PowerShare network, and Blackford Analysis and Visage have their own takes as well.

I mentioned above my participation in a round-table on the topic, the specifics of which I cannot really discuss. Suffice it to say that an interested party gathers a group together every RSNA to discuss the controversial topic of the year, and of course AI in Radiology was the clear choice for 2017. I was somehow included in a list of luminaries, and I quickly felt like an 8th-grader who wandered into a Quantum Physics class at MIT. But I held my own in this rarefied crowd of radiologists, executives, and even an attorney; at least there was relatively little eye-rolling and snickering when I offered my humble opinions. I can tell you that the general feeling was positive for our future. Since I don't have permission to quote the other members, I'll simply tell you what I said. My profound comments were synthesized after a long phone chat with Dr. Siegel, and long (and occasionally adversarial) discussions with friends on Aunt Minnie. Several things are clear to me. First and foremost, AI is a tool, a very powerful tool, but still a tool. It will not take our jobs away. Why? Here, I can only hand-wave, but I think I've hit on the answer: Computers do not think. We do. Moreover, we have insight, we dream, we have intuition. Our AI's might someday become very good at identifying stuff, but not at doing whatever it is we do to be radiologists, physicians. Computers don't have empathy, or feeling of any sort (although they can simulate it), and for that reason alone, they will never replace us. No one on the panel was particularly worried about AI taking over.

HOWEVER, there is no denying the tremendous power AI potentially wields, and again, we should embrace it AS A TOOL. I thought I was quite clever when suggesting that the relationship must be symbiotic, the human and the cybernetic working together as one organism. The old term Cyborg comes to mind. As it turns out, Dr. Dreyer mentioned something similar but a bit more organic in a recent article: "Centaur radiologists, by understanding how to work with computers, AI, and ML, combined with their sophisticated clinical knowledge base gained from medical training and experience, can provide more and better information in their interpretations." Emphasis mine.

Integral to the symbiotic/cyborg/centaur approach is the need for interactivity with the process itself. We have to be a huge part of the feedback loop for AI's deployment and training. We MUST keep control of this process, or we'll end up with the same situation we had with PACS: the vendors will create products that sell to the IT and C-Suite folks, but are not optimal for us. I fear that scenario far more than any fantasy of HAL taking over the radiological ship.

The lesson for us radiologists is simple. GET INVOLVED. I've discovered two approaches that allow us to do so, and I'm sure there are more. I promise everyone has something to contribute to this process. But if you sit back and watch, you might end up being superseded by those who understand and value this technology.

So, together, we can all...
